
Tech we’re looking forward to using in 2020

Oct 18

Shared by Gian Pablo Villamil

At Britelite, we stand out by bringing together technology and design to create magic for our customers. Last year we broke new ground in using wearables, embedded systems, and even beer-pouring robots.

We are also always on the lookout for new and exciting tech, and have been keeping a close eye on recent conferences and announcements. Here are some of the new tools we are looking forward to using in 2020:

Augmented Reality

Slowly but surely, Augmented Reality is gaining ground as a way to create powerful experiences for customers. At this year’s Augmented World Expo, we saw many examples of enterprise-oriented AR.

None of this tech works in isolation, so it matters that it is supported by the tools we already use. For example, we use Unity in many of our projects, and it now supports all of the technologies we are most looking forward to.

Here is some of the new tech that we have in our sights for 2020:

ARKit 3.0 and Reality Composer: Apple continues to enhance AR features in iOS, and the upcoming release of ARKit 3.0 with iOS 13 should bring some dramatic new capabilities. Most striking is “People Occlusion”, which allows AR content to appear in front of or behind people in the scene. Also noteworthy are the ability to detect the body poses of people in the scene and the ability to create shared worlds that several users can experience together.

A teaser of these and other features in action appears in Microsoft’s Minecraft Earth demo and announcement, presented by CEO Satya Nadella!

As part of these AR enhancements, there is now an application for iOS and macOS called Reality Composer, which makes it very easy to create AR worlds with high visual fidelity. It will become generally available alongside ARKit 3.0 and iOS 13.

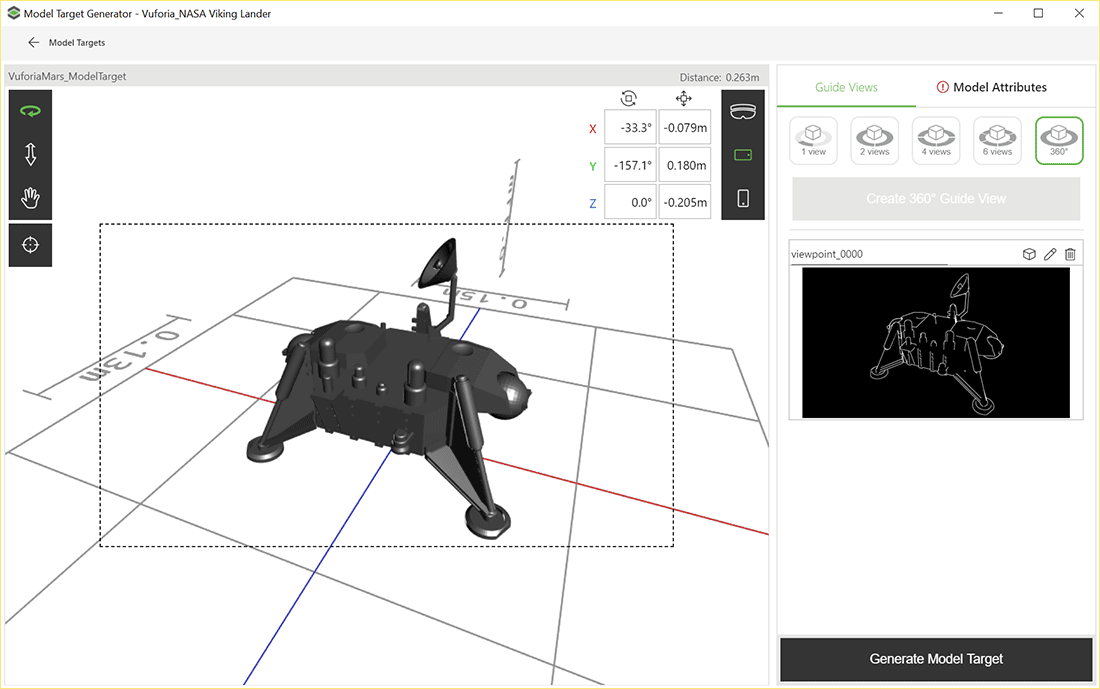

Vuforia Model Targets: Vuforia, long known as an early player in AR, is significantly extending its capabilities with a feature called Model Targets, which associates AR content with a physical object. Its latest release adds an enhancement called Model Targets 360, which uses machine learning to dramatically reduce the time needed to set up a Model Target.

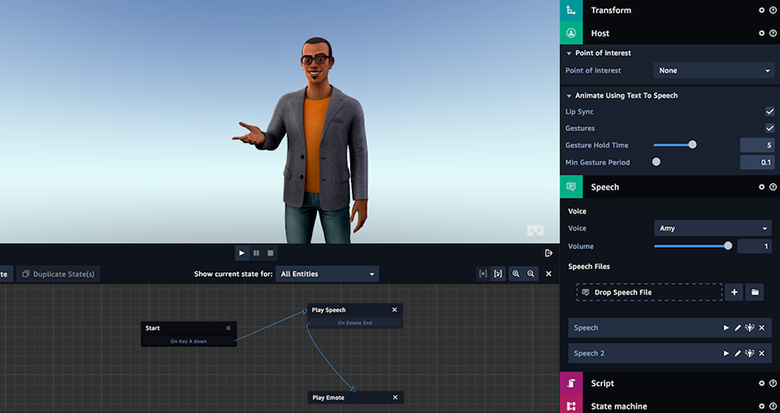

AWS Sumerian: Amazon’s Sumerian is a web-based development environment for creating AR and VR experiences that can be deployed to almost all of the AR hardware available right now. While it took some time to gain traction, there is now a large enough installed hardware base for it to really come into its own.

Something we find especially interesting is its integration with other AWS services, including speech-to-text, face recognition and more, presented through “hosts”: animated characters that guide users through an experience.

Sensing users

Britelite has used a number of techniques to track users in a space, in order to provide fun and fluid interactions. We have used the original Kinect sensor extensively (and still do), as well as Intel RealSense depth cameras. There are also some interesting new developments that we plan to roll out in the coming year.

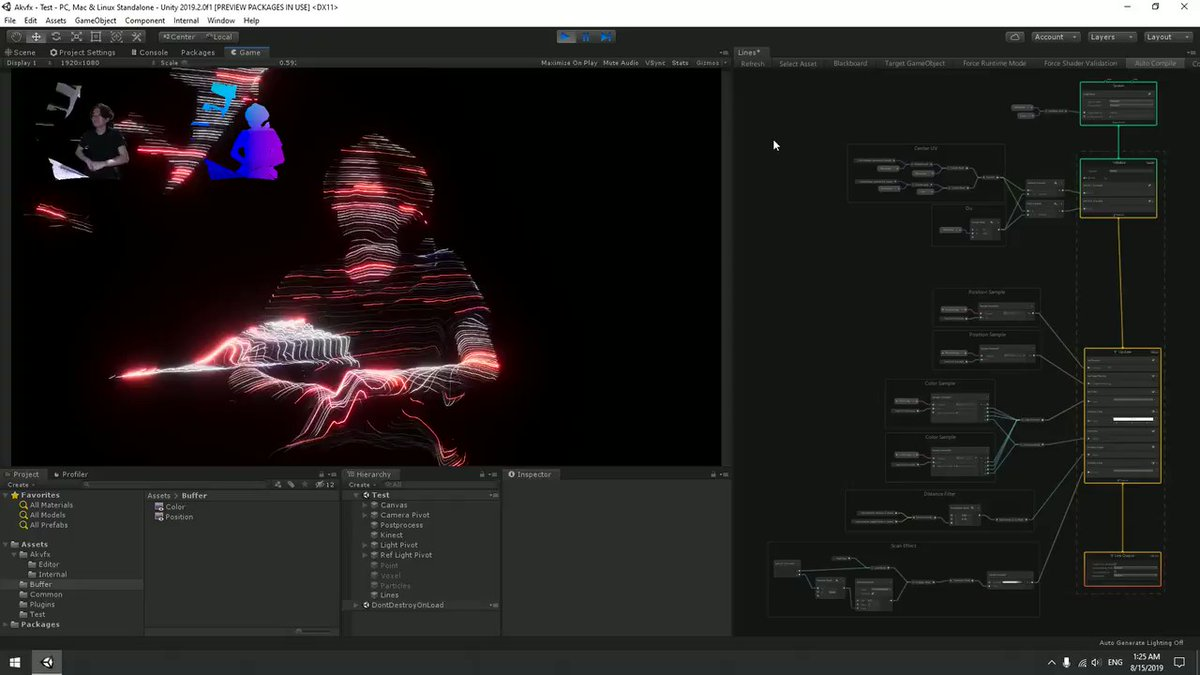

Azure Kinect: Microsoft’s Kinect was first released as a peripheral for the Xbox game console, and then became very popular for implementing gesture-based interactions. Everyone was surprised when it was taken off the market in 2017, but this year Microsoft released a successor, the Azure Kinect. Unlike previous versions, the Azure Kinect is squarely focused on application developers, and uses AI to improve the detection of people and gestures. Of particular interest to Britelite, several Azure Kinects can be linked together and synchronized to detect many people across large spaces.

Some of the creative technologists who most inspire us are already hard at work testing this system, including Keijiro Takahashi of Unity.

LIDAR: If you live in the SF Bay Area, you are probably very familiar with the sight of self-driving cars being tested, and in particular the little spinning cylinders that seem to be mounted all over them.

These are LIDAR (Light Detection and Ranging) sensors, and they are very good at detecting people and other objects in space. LIDAR sensors used to be extremely expensive, on the order of $75,000, but intense interest from automotive and robotics companies has dramatically reduced their price, in some cases to just a few hundred dollars.

We are seeing more and more use of LIDAR as a supplement or alternative to depth cameras for some types of interaction sensing. If LIDAR can keep cars from hitting people, it can also help us understand what people are doing!
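As a rough illustration of how a LIDAR sweep can be turned into the positions of people, here is a minimal Python sketch. It assumes a 2D scanning sensor that reports each sweep as (angle in radians, range in meters) pairs, with a range of 0 meaning "no return". The function names and thresholds are hypothetical and not taken from any vendor SDK. The sketch converts the readings to x/y points, groups neighboring points into clusters, and keeps the clusters that are roughly the width of a person.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def scan_to_points(scan: List[Tuple[float, float]]) -> List[Point]:
    """Convert (angle_radians, range_meters) readings into x/y points in meters.

    Assumes a range of 0 (or less) means the sensor got no return, so those are dropped.
    """
    return [(r * math.cos(a), r * math.sin(a)) for a, r in scan if r > 0]


def cluster_points(points: List[Point], max_gap: float = 0.15) -> List[List[Point]]:
    """Group consecutive points (in sweep order) whose spacing is at most max_gap meters.

    max_gap is a hypothetical default and would need tuning per sensor and site.
    """
    clusters: List[List[Point]] = []
    for p in points:
        if clusters and math.dist(clusters[-1][-1], p) <= max_gap:
            clusters[-1].append(p)  # close to the previous point: same object
        else:
            clusters.append([p])    # large jump in position: start a new object
    return clusters


def detect_people(
    scan: List[Tuple[float, float]],
    min_width: float = 0.2,
    max_width: float = 0.8,
    max_gap: float = 0.15,
) -> List[Point]:
    """Return centroids of clusters whose end-to-end width is roughly person-sized."""
    people: List[Point] = []
    for cluster in cluster_points(scan_to_points(scan), max_gap):
        width = math.dist(cluster[0], cluster[-1])
        if min_width <= width <= max_width:
            cx = sum(x for x, _ in cluster) / len(cluster)
            cy = sum(y for _, y in cluster) / len(cluster)
            people.append((cx, cy))
    return people
```

For example, a sweep in which a person stands about 2 m in front of a wall 5 m away produces three clusters: two long wall segments, which are filtered out by width, and one narrow cluster whose centroid is the person's position. A real installation would add background subtraction and tracking across frames on top of this.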

The ever-impressive TeamLab group is increasingly using LIDAR in their projects. Their very popular SketchTown installation at the Children’s Creativity Museum now uses LIDAR instead of Kinect sensors, with vastly improved performance.

We will certainly be exploring how LIDAR can bring interactivity to large surfaces and installations.

Realtime graphics

RTX graphics: This is one we’re really excited about! RTX from Nvidia is a set of related technologies, built around hardware-accelerated ray tracing, that allows for incredibly realistic realtime rendering of computer-generated scenes.

At Britelite we use computer graphics extensively, both as part of installations and to preview work for our clients. RTX allows for unprecedented levels of realism in fly-throughs, both screen-based and in VR.

Moreover, we are increasingly finding that video and still-image renders that used to require an overnight job can now be delivered almost immediately, which opens up many more use cases for us.

VFX Graph (Unity): We are big users of the Unity development environment, and were very intrigued by last year’s announcement of the Visual Effect Graph, a node-based system for quickly putting together visual effects without writing code.

This feature is now part of the stable release of the Unity Editor, and we look forward to putting it to use.

Even the four-legged members of the Britelite team are excited about VFX Graph.

Lighting

As our name implies, we often integrate responsive lighting in our projects. We’ve delivered some large-scale LED projects recently, and are following trends in the LED lighting space closely.

Multi-element LEDs: Most full-color LEDs to date are based on red, green and blue elements (RGB), but this can limit the color palette, especially when it comes to brightness. A trend among LED suppliers is to provide additional channels, most typically RGB + W: red, green and blue plus a dedicated, super bright white LED.
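One common, deliberately naive way to drive an RGB + W fixture from ordinary RGB content is to move the grey component shared by all three color channels onto the dedicated white LED. The function below is a minimal sketch of that idea. It assumes 8-bit channels and a white LED whose color and brightness match full RGB white. Real fixtures usually need per-product calibration, and this is not presented as any particular supplier's method.

```python
from typing import Tuple


def rgb_to_rgbw(r: int, g: int, b: int) -> Tuple[int, int, int, int]:
    """Naive RGB -> RGBW split for 8-bit channels.

    Assumes the white LED exactly matches full RGB white, which real fixtures
    rarely do without calibration.
    """
    for channel in (r, g, b):
        if not 0 <= channel <= 255:
            raise ValueError(f"channel value {channel} is outside 0-255")
    w = min(r, g, b)  # the grey portion that all three channels share
    return r - w, g - w, b - w, w  # remaining color, plus white on its own LED
```

For example, pure white (255, 255, 255) becomes (0, 0, 0, 255), driving only the white element. A saturated orange (255, 128, 0) passes through unchanged because it has no shared grey component.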

However, there are many other possibilities, and we are seeing some creative use of RGB + A (amber) in large scale projects. Leo Villareal, famous as the creator of the Bay Lights, is now using RGBA LEDs in his vast London project. He notes that the true richness of the colors can’t actually be photographed!

We are working closely with our suppliers to explore additional possibilities to cast ever brighter lights on our immersive projects!

Come with us!

Are you interested in creating experiences for your organization that have never been seen before? Do the technologies described in this article sound interesting, but you need someone to help you understand what they can do for you?

It is important to understand that all of the things we’ve mentioned work best together, as an integrated installation. Improved graphics, user detection, new software: all of these support and enhance each other. At Britelite, we strive to go “beyond the demo”, and bring all these shiny new things together in projects that are designed to work and last.

If this sounds good to you, get in touch, and come visit to see what we are up to!

Britelite Immersive is a creative technology company that builds experiences for physical, virtual, and online realities.